Here's a transcript and the slides of the talk I gave at IxDA Interaction '23 about Community Notes.

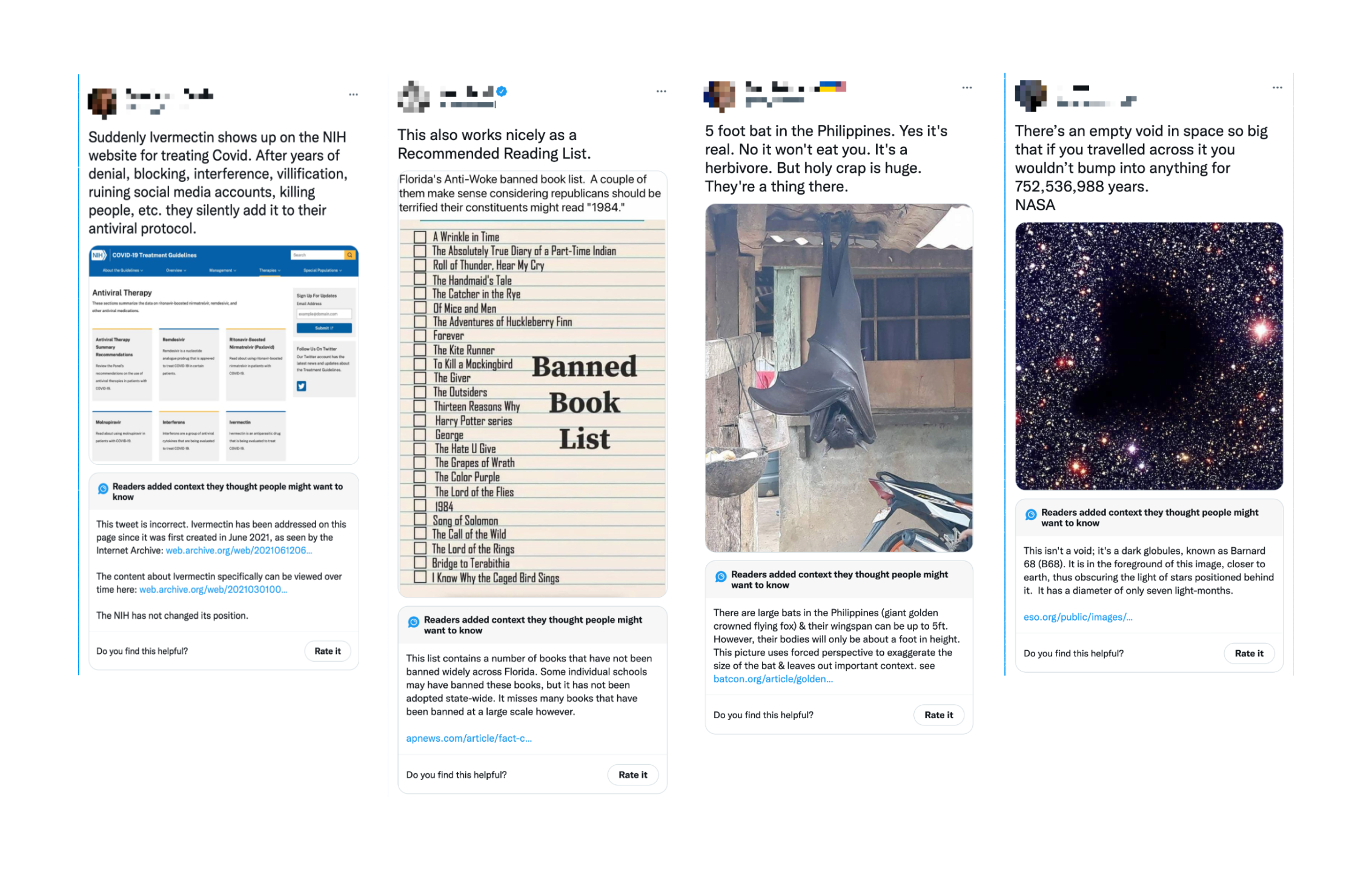

We're all familiar with the overwhelming problem of misleading information online. The example here on screen is a silly one, but we all know, by now, how harmful viral misleading content can be on the internet.

In recent years, platforms have invested heavily in building out a portfolio of solutions to address misinformation.

These solutions are all important developments, but they eventually run into three key challenges:

First of all, scale: for global platforms, it is nearly impossible to enforce policies on all content that is posted, in all languages. This means that moderation teams end up with many thousands of reports a day to review, and have to somehow prioritize this work, inevitably leaving some of it behind.

The second problem is speed. Because platforms like Twitter operate in real time, new Tweets or posts get most of their views within the first few hours after they are published. This means that even the most efficient moderation teams are often too late to significantly reduce the spread of misleading content.

And last, but perhaps most importantly, moderation decisions made by platforms inevitably feel like a black box, and some segments of the audience perceive them as biased. Many people don't trust institutions like tech and media companies with the power of moderation.

By defining the problem in these terms, we can see a clear opportunity: give the power to the people.

We're all familiar with products that benefit from the wisdom, input, and moderation of the crowd: from Wikipedia, to the maps we use every day to choose a restaurant, to chatrooms and forums moderated by the users themselves.

About 3 years ago, a team was formed at Twitter to explore crowdsourcing as a solution space for the problem of misleading content.

During the development of Community Notes, we guided our design with a few principles that we thought directly addressed the key issues I highlighted earlier (scale, speed, and trust).

First, to address scale, we need to harness the wisdom of as many people as possible, covering many geographies, languages, and topics.

Then, to earn speed and trust, we need the community itself to make all decisions – this means no human review or internal processes at Twitter that can slow it down, become a bottleneck, or feel like a black box to the public.

And perhaps more importantly, we decided that in order to fully earn people's trust, everything about the program should be transparent and open to the public, from the code powering the algorithm, to the data running through the system, and also all decisions and changes we made to the product.

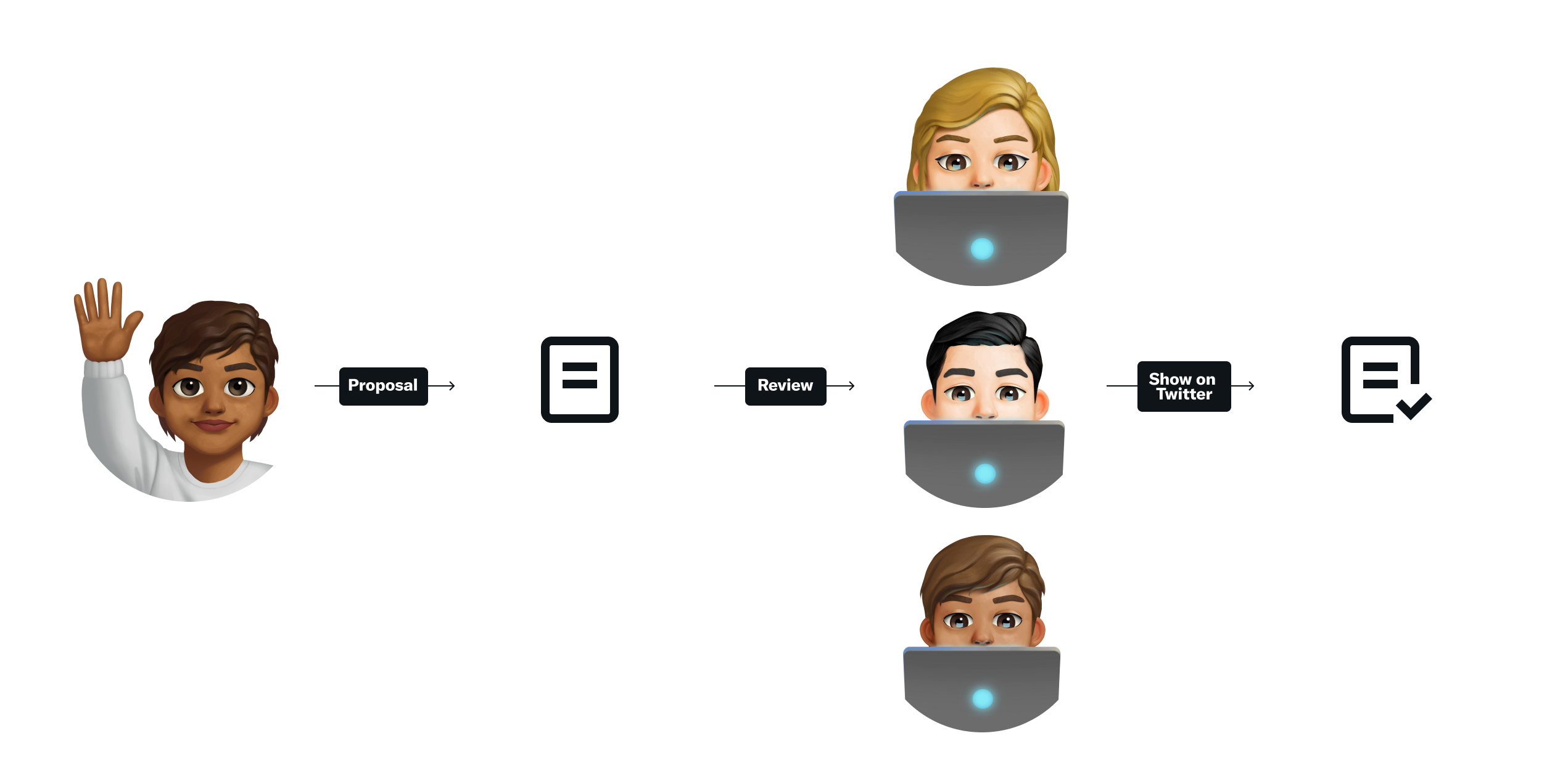

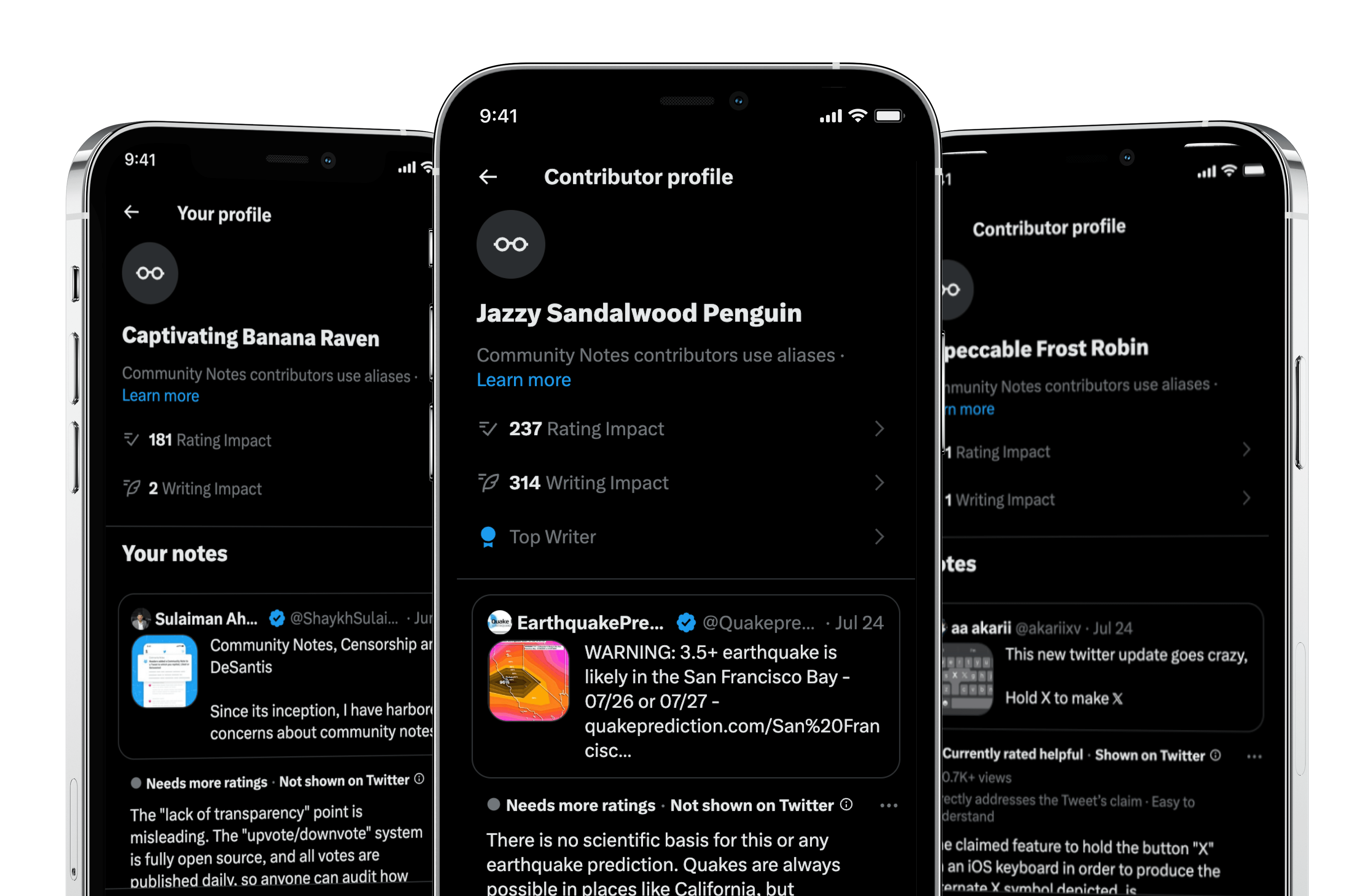

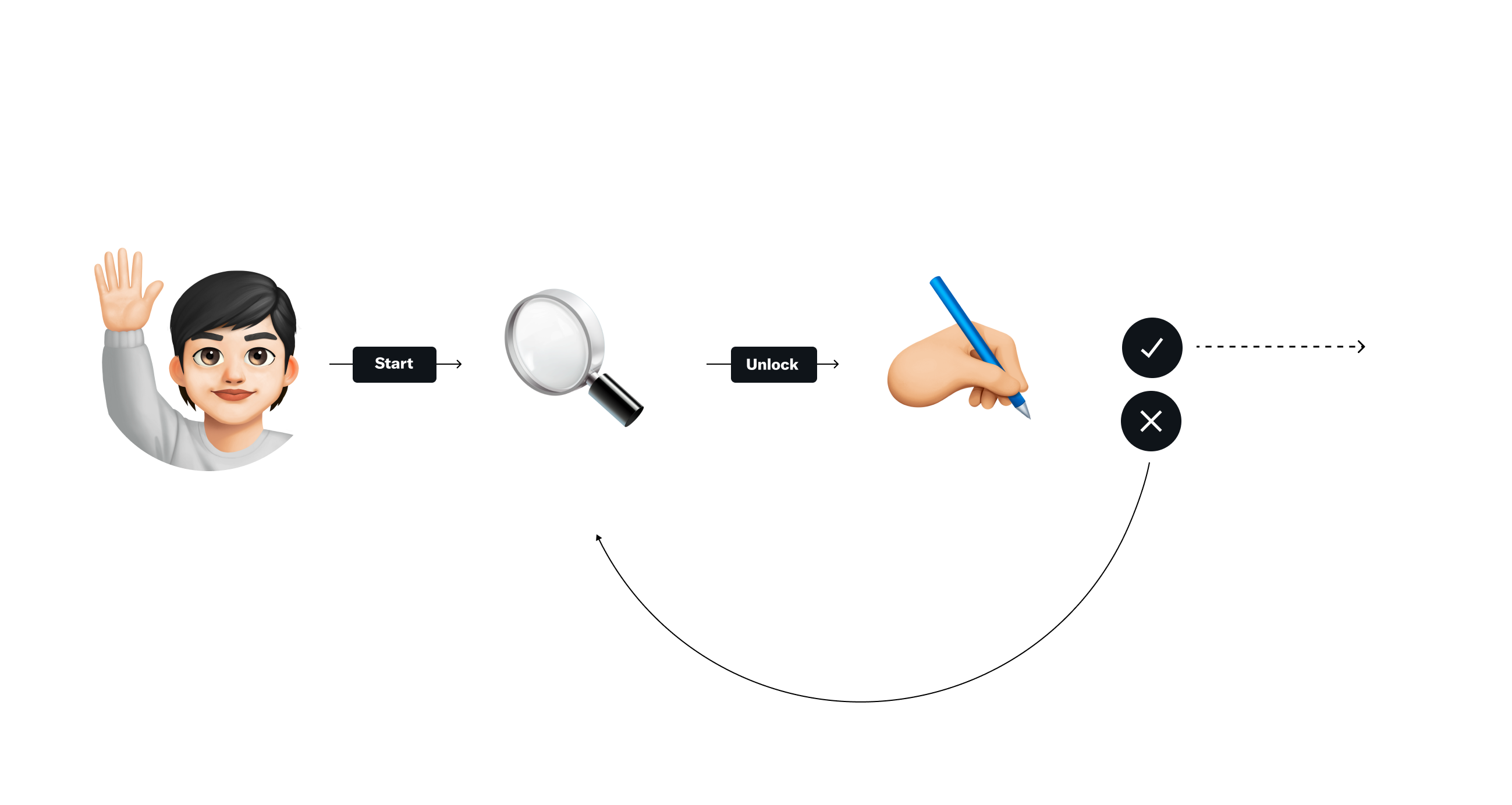

At a high level, our system empowers contributors (people on Twitter just like you) to find Tweets they believe are misleading and to write proposed notes that add context to them.

Those note proposals are then sent to other contributors who can rate them. And if enough raters from diverse perspectives agree that the note is helpful, the note gets shown directly on the Tweet for everyone else.

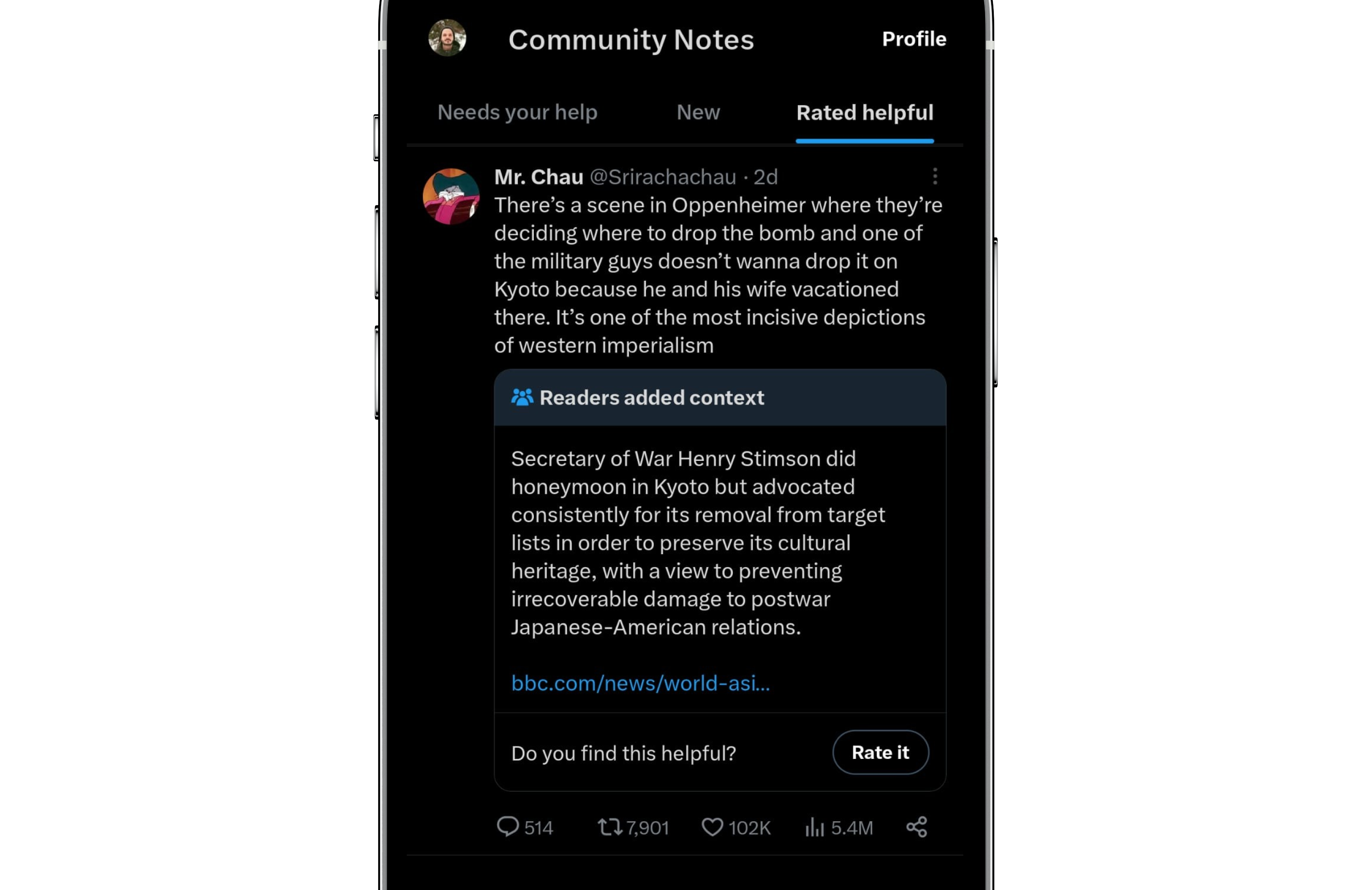

The goal, then, is to help contributors write and rate notes that are helpful enough to be shown directly on the Tweet, like this:

It seems like a simple enough system design, but implementing it has come with many challenges, and I'd like to share some with you today.

First of all, it was paramount to us that people from all backgrounds felt safe contributing.

In our first versions, contributors used their real Twitter profiles to write and rate Community Notes, and we quickly learned that this approach wouldn't work.

Our most eager contributors let us know that they were concerned about using their identities, especially because adding notes to highly viral Tweets could redirect lots of attention toward them, including harassment.

At the same time, recent academic research tells us that anonymity can promote less polarizing behavior online, because people are more open to different viewpoints when their identity is not directly associated with the content they're reading or writing.

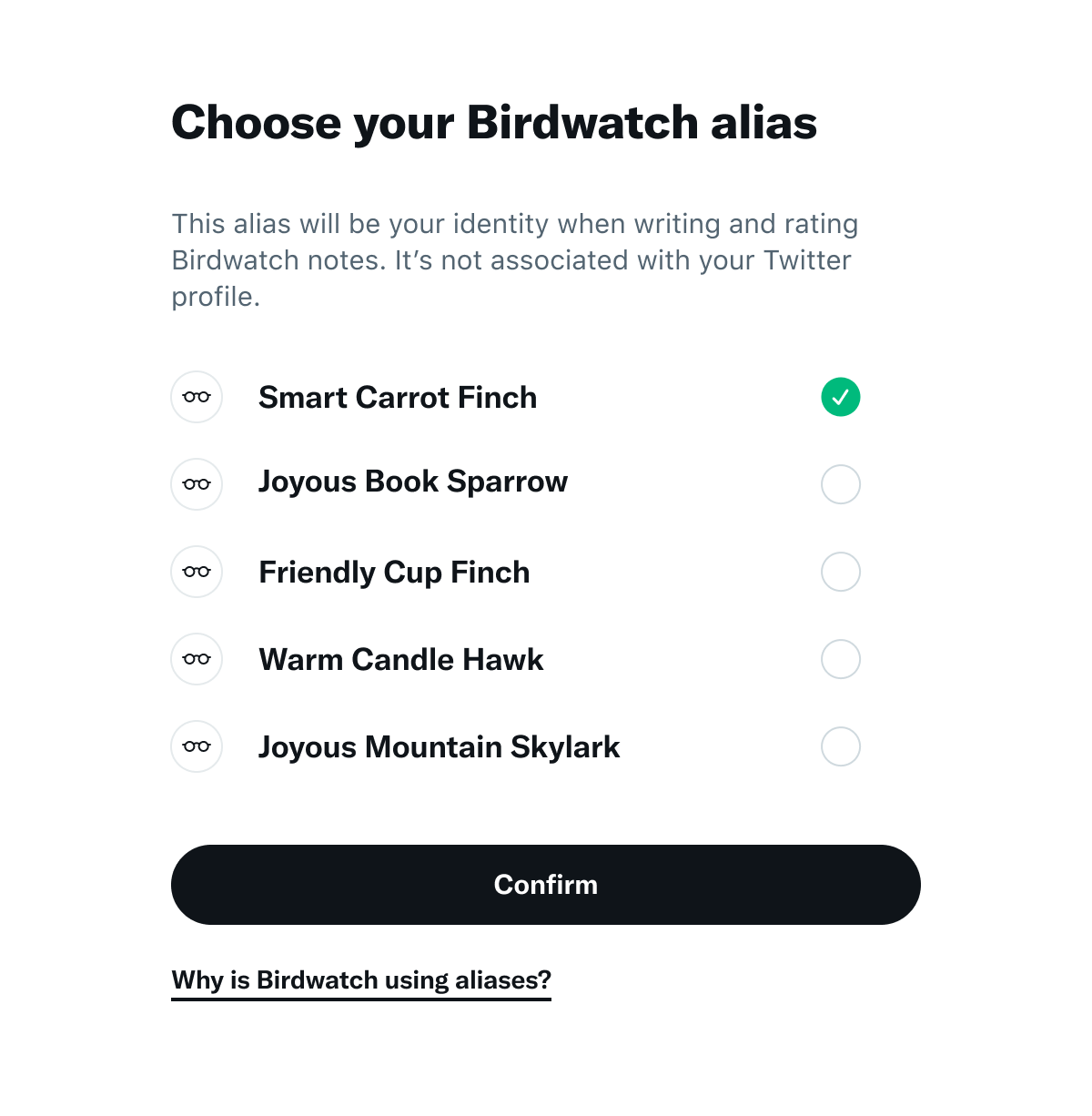

For these reasons, we built an entirely new identity system in Community Notes that keeps contributors' Twitter identities private and helps them focus on the merits of the content rather than its authorship.

Anonymity has many benefits, but it also comes with its own challenges, the main one being reputation.

If the program is entirely run by anonymous accounts, how do we keep them accountable for the quality of their contributions? How can you trust people, if you don't know who they are?

This leads us to a second important design decision we built into Community Notes: Graduated access.

In the program, contributors gradually earn abilities according to their past contributions.

New contributors start only with the ability to review other people's notes. This gives them a chance to learn what makes helpful and not helpful notes, and what the community standards are.

After they get enough experience rating notes, they earn the ability to write their own notes.

If their notes are seen as helpful, they can keep writing notes, and if they're not, they can temporarily lose that ability and go back to rating only.
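To make the flow concrete, here is a minimal sketch of graduated access as a small state machine. Everything in it is hypothetical: the `Contributor` class, the rule that a rating counts toward unlocking when it agreed with the note's eventual status, and the `UNLOCK_SCORE` and `MAX_UNHELPFUL` thresholds are illustrative assumptions, not the program's actual rules or numbers.

```python
from dataclasses import dataclass

# Hypothetical thresholds, for illustration only (not the real program values).
UNLOCK_SCORE = 5    # agreeing ratings needed before writing is unlocked
MAX_UNHELPFUL = 3   # unhelpful notes in a row before writing is revoked


@dataclass
class Contributor:
    can_write: bool = False
    rating_score: int = 0
    unhelpful_notes: int = 0

    def record_rating(self, matched_final_status: bool) -> None:
        """Hypothetical rule: a rating earns credit if it agreed with the note's final status."""
        if matched_final_status:
            self.rating_score += 1
        if not self.can_write and self.rating_score >= UNLOCK_SCORE:
            self.can_write = True
            self.unhelpful_notes = 0

    def record_note_outcome(self, rated_helpful: bool) -> None:
        """Track how the community rated a note this contributor wrote."""
        if not self.can_write:
            raise PermissionError("writing is still locked for this contributor")
        if rated_helpful:
            self.unhelpful_notes = 0  # a helpful note resets the strike count
        else:
            self.unhelpful_notes += 1
            if self.unhelpful_notes >= MAX_UNHELPFUL:
                # Back to rating only; the score has to be earned again.
                self.can_write = False
                self.rating_score = 0
```

In this sketch a new `Contributor()` starts with `can_write == False`, gains writing after `UNLOCK_SCORE` agreeing ratings, and drops back to rating only after `MAX_UNHELPFUL` unhelpful notes in a row.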

This system has proven extremely effective at onboarding well-intentioned new users while keeping spammers and bad-faith actors out.

Another incredibly tricky question in building a system like this is how to ensure that the outcomes are not biased or easy to manipulate.

If the helpful notes are chosen based on ratings from a group of anonymous users, doesn't that mean that the opinion of the majority will always win?

Or even worse, doesn't that mean that someone could coordinate with a group of people who agree with them, in order to remove, or promote, a certain point-of-view?

The solution to this issue comes from a growing field of social and computer science called bridging.

In most social media products, the algorithms focus on finding and promoting content that has been liked by many people, or by people that are similar to you.

This approach works extremely well if your goal is to personalize or promote the most fun, engaging, interesting, or even controversial content, but doesn't do a great job of finding information that most people, including people who disagree, would find helpful, accurate, and reliable.

Community Notes' algorithm is totally different: it does not care about majorities or popularity.

What it looks for are notes that people from different perspectives can agree on.

It doesn't look for absolute consensus, and not everyone has to agree.

But our algorithm rewards notes that are written in ways that bridge at least a few people who often disagree with each other.
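A minimal sketch of the bridging idea, loosely modeled on the matrix-factorization approach described in the published Community Notes paper: each rating is modeled as r_un ≈ μ + i_u + i_n + f_u·f_n, where the one-dimensional factors f_u and f_n capture the viewpoint axis along which raters tend to disagree, and the note intercept i_n captures the helpfulness left over once that disagreement is explained. The regularization strengths (0.15 on intercepts, 0.03 on factors), the 0.40 cutoff, the plain gradient-descent fitter, and the toy ratings are assumptions for illustration, not the production code.

```python
import random

# Assumed hyperparameters for this sketch (not taken from production code).
LAMBDA_I = 0.15        # stronger L2 penalty on intercepts (mu, i_u, i_n)
LAMBDA_F = 0.03        # weaker L2 penalty on the viewpoint factors
HELPFUL_CUTOFF = 0.40  # note intercept needed for a note to be shown


def fit_bridging(ratings, epochs=6000, lr=0.005, seed=0):
    """Fit r_un ~ mu + i_u + i_n + f_u * f_n by full-batch gradient descent.

    ratings: list of (rater, note, value), value 1.0 = helpful, 0.0 = not.
    Returns the fitted note intercepts {note: i_n}.
    """
    rng = random.Random(seed)
    raters = sorted({u for u, _, _ in ratings})
    notes = sorted({n for _, n, _ in ratings})
    mu = 0.0
    i_u = {u: 0.0 for u in raters}
    i_n = {n: 0.0 for n in notes}
    # Small random factors break the symmetry of the 1-D viewpoint axis.
    f_u = {u: rng.gauss(0.0, 0.1) for u in raters}
    f_n = {n: rng.gauss(0.0, 0.1) for n in notes}

    for _ in range(epochs):
        # Each gradient starts from the derivative of its L2 penalty.
        g_mu = 2 * LAMBDA_I * mu
        g_iu = {u: 2 * LAMBDA_I * i_u[u] for u in raters}
        g_in = {n: 2 * LAMBDA_I * i_n[n] for n in notes}
        g_fu = {u: 2 * LAMBDA_F * f_u[u] for u in raters}
        g_fn = {n: 2 * LAMBDA_F * f_n[n] for n in notes}
        # Add the derivative of the squared prediction error for every rating.
        for u, n, r in ratings:
            err = r - (mu + i_u[u] + i_n[n] + f_u[u] * f_n[n])
            g_mu -= 2 * err
            g_iu[u] -= 2 * err
            g_in[n] -= 2 * err
            g_fu[u] -= 2 * err * f_n[n]
            g_fn[n] -= 2 * err * f_u[u]
        # Update all parameters simultaneously from the old values.
        mu -= lr * g_mu
        for u in raters:
            i_u[u] -= lr * g_iu[u]
            f_u[u] -= lr * g_fu[u]
        for n in notes:
            i_n[n] -= lr * g_in[n]
            f_n[n] -= lr * g_fn[n]
    return i_n


# Toy data: two groups of raters who usually disagree.
group_a = [f"a{k}" for k in range(4)]
group_b = [f"b{k}" for k in range(4)]
ratings = []
for u in group_a + group_b:
    ratings.append((u, "bridge", 1.0))     # helpful to both groups
    ratings.append((u, "unhelpful", 0.0))  # unhelpful to both groups
    ratings.append((u, "pro_a", 1.0 if u in group_a else 0.0))
    ratings.append((u, "pro_b", 1.0 if u in group_b else 0.0))
# A one-sided pile-on: every A rater weighs in, only half of group B does.
for u in group_a + group_b[:2]:
    ratings.append((u, "pile_on", 1.0 if u in group_a else 0.0))

intercepts = fit_bridging(ratings)
pile_votes = [r for _, n, r in ratings if n == "pile_on"]
majority_share = sum(pile_votes) / len(pile_votes)  # 4 of 6 raters
shown = {n: i > HELPFUL_CUTOFF for n, i in intercepts.items()}
```

In this toy setup, the pile-on note is rated helpful by two thirds of its raters, so a simple majority vote would show it. Its intercept stays low, though, because the factor term already explains the split along the viewpoint axis; only the note endorsed by both groups clears the cutoff. Penalizing intercepts more heavily than factors is what pushes one-sided agreement into the factor term rather than the intercept.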

Another important lesson was how much visual design, copy, and presentation matter. We've invested hundreds of hours in research and design testing, and learned that how we present these notes to people can be as important as the quality of their content.

As an example, our initial designs were heavy on calling people's attention to how misleading the Tweet was, and as I said earlier, we used to present the note author's real identity upfront.

But over time, we learned that people, especially the most skeptical, are much more likely to learn from a note if we reframe the program as providing additional context, written by a group of people who are Twitter users just like them.

We also rebranded the entire product earlier this year, from Birdwatch to Community Notes. Even though some of our contributors had grown to love the original name, we learned that Community Notes elicited more positive reactions from those who are not contributors and see these notes on Twitter for the first time.

As I mentioned before, another key ingredient in our design is that all our code is documented and available on GitHub.

This allows other institutions, engineers, and researchers to audit it, spot any bias or bugs, and write their own improvements to how the system works.

We have recently published a paper that explains in depth how the algorithm works, and have seen other researchers publishing their findings as well.

So, does it work? What have we observed so far?

First, one of our most powerful and surprising learnings was how broad Community Notes' coverage can be.

While our past and current policies only deal with very specific topics, Community Notes can cover any Tweet, regardless of which policies are in place. We've seen notes adding context to Tweets about manipulated or out-of-context videos and random internet curiosities, all the way to the most pressing breaking news, such as the recent earthquakes in Turkey, as well as education, politics, finance, and health.

Well, what about quality? Are these notes helpful?

Our surveys of Twitter users show that most people find Community Notes somewhat or extremely helpful.

We've also learned that the notes are informative: they actually change people's understanding of topics.

Our surveys show that people were 20-40% less likely to agree with misleading information in a Tweet after reading the note about it.

What's more, there's no statistically significant difference among self-identified Democrats, Independents, and Republicans. This means our bridging algorithm is successful in finding notes that people from different perspectives can agree on.

What about facts? Are the notes accurate?

In partnership with professional fact checkers, we have found that nearly all notes are highly accurate.

Finally, we also find that these notes change how people choose to behave on Twitter.

Our data shows that people are 15 to 35% less likely to Like or Retweet a Tweet when a Community Note is visible.

These are only the first-order effects we can measure; in practice, the impact on reducing the spread of this type of content could be much larger.

We're currently focused on expanding the program to all countries and languages, which in itself is a huge challenge.

We also see opportunities to apply our approach in other ways, such as attaching notes to viral pieces of media like videos or articles, and bringing the crowdsourcing, bridging and open-source principles to other surfaces in the product.

To conclude, I'd like to salute the interaction design community for giving us the space and attention to share what we've learned, and I hope that by doing our work transparently we can inspire you all to pursue new solutions to the problems we see on the internet today.

If you want to learn more, follow us @CommunityNotes, check out our open-source code on GitHub, and sign up to become a contributor if you want to help keep others informed.
